Yichao Cai

I am a third-year Ph.D. student in Computer Science at the Australian Institute for Machine Learning (AIML), University of Adelaide, advised by Prof. Javen Qinfeng Shi. Previously, I received my M.Sc. and B.Eng. in Instrument Science from Wuhan University of Technology.

My research studies multimodal learning and identifiable, causal representation learning, with a focus on how language supervision shapes alignment and semantic factorization in vision–language models. My goal is to build interpretable, reliable, and human-aligned AI systems that uncover amodal semantic representations underlying complex multimodal data.

News

- Happy to share that I’ll be attending MLSS Melbourne 2026 for two weeks (Feb 2–13, 2026). Looking forward to learning from world-class speakers and connecting with the community.


- I served as a guest lecturer in the Statistical Machine Learning course, presenting recent advances in vision–language modeling. See the lecture slides.

- Excited to share that our work on cross-modal misalignment was selected as a Spotlight (top 3.2%) at NeurIPS 2025!

- Check out our new preprint: "On the Value of Cross-Modal Misalignment in Multimodal Representation Learning".

-

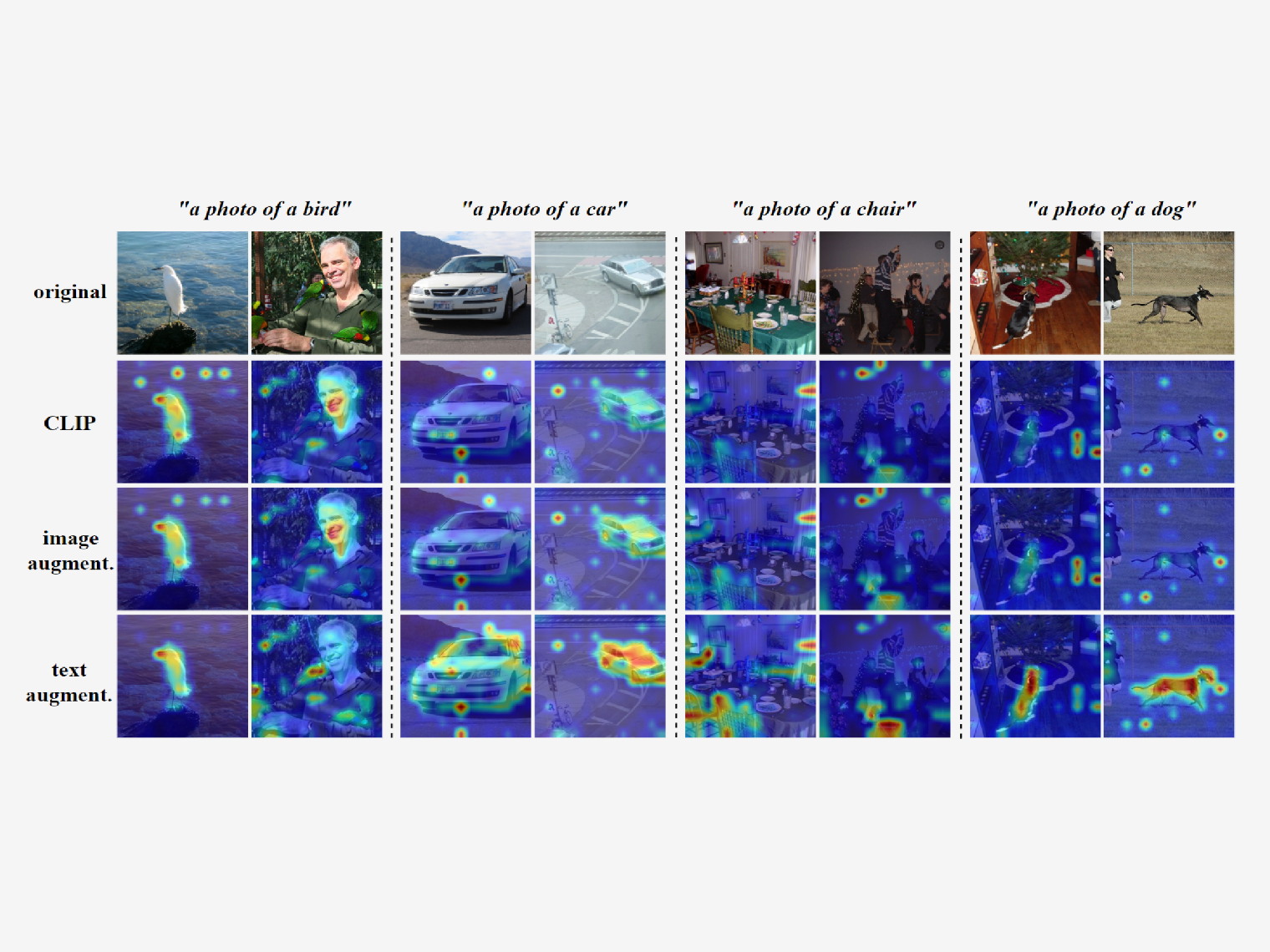

Our work, "CLAP: Isolating Content from Style through Contrastive Learning with Augmented Prompts", is accepted to appear at ECCV 2024.

Publications

Academic Services

- Reviewing - TMLR, ICLR (2026), ICML (2026).

Teaching

- Guest Lecturer & Head Tutor - Statistical Machine Learning (Semester 2, 2025) @ University of Adelaide

- Teaching Assistant - Using Machine Learning Tools (Trimester 2, 2025) @ University of Adelaide

- Teaching Assistant - Concepts in AI and ML (Trimester 1, 2025) @ University of Adelaide

Blog

Some notes and thoughts on machine learning and representation learning.

Honors & Awards

Contact

Email: yichao.cai@adelaide.edu.au

Office: LG.25.04, AIML Building, Lot Fourteen, Corner of North Terrace & Frome Road, Adelaide SA 5000, Australia